Where are we going in 2024 with LLM AI?

Predicting the future is hard, but some LLM AI trends are starting to emerge.

TL;DR: LLM AI points to a few trends. (i) Small expert models fine-tuned for specific tasks perform extremely well, so 2024 should be the year this takes off, both for individual models and for combinations of a few models. (ii) Given the performance of recent agents, LLM planning could potentially be solved with one of the newer models. (iii) A new LLM architecture may emerge as SOTA, since there is no particular reason why an architecture that does not compress context, like attention, should be the theoretical best.

What sticks out?

In 2023, open-source LLMs showed relatively good performance compared with closed-source LLMs such as OpenAI's. While this statement deserves some skepticism, the general picture is that open source does not lag far behind closed-source LLMs when compared by Elo rating.

Yes, GPT-4 Turbo is way up there with a 100-point Elo lead, but it is NOT a 1000-point lead like Magnus Carlsen vs everyone else in chess (an intentional overstatement for effect).
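For intuition on what these gaps mean, here is a short sketch of the standard Elo expected-score formula, E = 1 / (1 + 10^((R_B - R_A) / 400)). It assumes the leaderboard ratings behave like classic Elo ratings on the usual 400-point scale; the function name is illustrative.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (roughly, win probability) of A against B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Compare the ~100-point lead with the exaggerated 1000-point chess-style lead.
for lead in (100, 1000):
    print(f"{lead}-point lead -> expected score {elo_expected_score(lead, 0):.3f}")
# prints:
# 100-point lead -> expected score 0.640
# 1000-point lead -> expected score 0.997
```

Under this model, a 100-point lead means winning roughly 64% of head-to-head comparisons, which is clearly ahead but far from the near-total dominance a 1000-point gap would imply.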

The one thing that sticks out is Mixtral 8x7B's performance: a sparse mixture of smaller expert networks is enough to come close to OpenAI's performance. This raises a natural question: can a fine-tuned small model really perform well?
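Mixtral 8x7B is a sparse mixture of experts: in each layer, a router scores 8 feed-forward experts for every token, only the top 2 are actually run, and their outputs are combined using softmax weights over the two selected scores. Below is a toy sketch of that routing step. It assumes scalar inputs, hand-picked router logits in place of a learned router, and trivial stand-in experts; all names are hypothetical.

```python
import math
from typing import Callable, List

Expert = Callable[[float], float]


def top2_moe(x: float, gate_logits: List[float], experts: List[Expert]) -> float:
    """Sparse mixture of experts: evaluate only the two highest-scoring experts."""
    # Indices of the two largest router logits.
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:2]
    # Softmax over the selected logits only, so the two weights sum to 1.
    m = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    # Weighted sum of the chosen experts' outputs; the other experts are never called.
    return sum(exps[i] / total * experts[i](x) for i in top)


# Eight toy experts: expert k multiplies its input by k.
experts = [lambda x, k=k: k * x for k in range(8)]
logits = [0.0, 0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0]
print(top2_moe(2.0, logits, experts))  # experts 3 and 5 get weight 0.5 each -> prints 8.0
```

The point of the design is that per-token compute depends on the 2 experts that run, not on all 8, which is how the model keeps inference cost closer to that of a much smaller model.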

YES. If we look at a model fine-tuned to learn Chain of Density prompting to better summarise text, we find an interesting conclusion (https://blog.langchain.dev/fine-tuning-chatgpt-surpassing-gpt-4-summarization/).

Performance:

Price:

So from a business standpoint, the fine-tuned model is far better: its performance is comparable to that of a larger, more complex model AND its price is far lower. As such, the market should converge toward fine-tuned models.
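The business argument comes down to simple per-token arithmetic. Here is a minimal sketch. The prices and the workload are hypothetical placeholders, not any provider's actual price list, and the 10x price gap is an assumption chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    input_per_1k: float   # USD per 1,000 input tokens (placeholder value)
    output_per_1k: float  # USD per 1,000 output tokens (placeholder value)


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request under per-1k-token pricing."""
    return input_tokens / 1000 * p.input_per_1k + output_tokens / 1000 * p.output_per_1k


# Hypothetical prices: a large general model vs a fine-tuned small model.
large_model = Pricing(input_per_1k=0.03, output_per_1k=0.06)
fine_tuned_small = Pricing(input_per_1k=0.003, output_per_1k=0.006)

# Hypothetical workload: 100,000 articles of ~2,000 tokens, each summarised in ~200 tokens.
requests = 100_000
for name, pricing in [("large", large_model), ("fine-tuned small", fine_tuned_small)]:
    total = request_cost(pricing, 2_000, 200) * requests
    print(f"{name}: ${total:,.2f}")
```

If the quality is comparable, the total cost difference scales directly with the price ratio, which is why the gap matters so much at volume.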

What is less solved but could be?

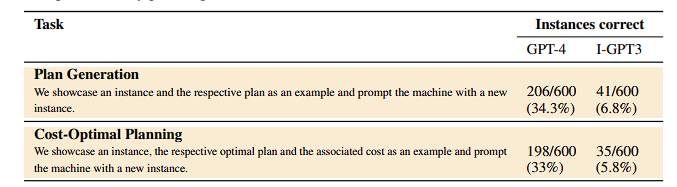

LLM planning is one problem that remains largely unsolved. It is hard because effective planning needs both complex reasoning AND domain knowledge. As a result, Auto-GPT and BabyAGI did not work all that well on complex tasks.

Nevertheless, in theory planning should be solvable with an LLM agent framework. Agents already perform decently with OpenAI's Code Interpreter AND Vertex AI's new code assistant, which is currently in testing.
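Most LLM agent frameworks are built around a core loop: the model chooses an action, a tool executes it, and the observation is fed back so the model can plan its next step. Below is a minimal sketch of that loop. It assumes a scripted stand-in for the LLM and a toy `add` tool, both hypothetical. In a code-interpreter style setup, the tool would execute model-written code instead, and the hard planning work lives inside the model call.

```python
from typing import Callable, Dict, List, Tuple

# A "model" maps the transcript so far to the next (action, argument) pair.
# In a real framework this would be an LLM call; here it is a hypothetical stub.
Model = Callable[[List[str]], Tuple[str, str]]
Tool = Callable[[str], str]


def run_agent(model: Model, tools: Dict[str, Tool], goal: str, max_steps: int = 5) -> str:
    """Minimal act-observe loop: pick a tool, see the result, repeat until 'finish'."""
    transcript = [f"GOAL: {goal}"]
    for _ in range(max_steps):
        action, arg = model(transcript)
        if action == "finish":
            return arg
        if action not in tools:
            transcript.append(f"ERROR: unknown tool {action!r}")
            continue
        observation = tools[action](arg)
        # Feeding the observation back lets the model revise its plan on the next step.
        transcript.append(f"ACTION: {action}({arg}) -> {observation}")
    return "gave up after max_steps"  # step budget guards against endless loops


# Hypothetical tool and scripted "model" standing in for an LLM.
tools = {"add": lambda arg: str(sum(int(v) for v in arg.split(",")))}


def scripted_model(transcript: List[str]) -> Tuple[str, str]:
    if len(transcript) == 1:
        return "add", "17,25"  # first step: call a tool
    return "finish", transcript[-1].split("-> ")[-1]  # then answer with the last observation


print(run_agent(scripted_model, tools, "What is 17 + 25?"))  # prints 42
```

The step budget and the explicit error observation for unknown tools are the kind of guardrails that loosely planned agents like Auto-GPT needed on long tasks.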

A good paper from Nov-2023 that discusses various LLM planning issues is here: https://arxiv.org/pdf/2206.10498.pdf

As such, 2024 could be the year in which planning performance improves as more and more research goes into it.

Finally, the attention mechanism, while great, has a big issue: its cost scales quadratically with the context window length. Recent work has made good progress toward bringing this down to linear scaling. Notably:

Mamba, a Dec-2023 paper (https://arxiv.org/abs/2312.00752), shows that linear scaling in sequence length can be attained. There are other papers out there, but according to its authors, Mamba is the first linear-time sequence model that truly achieves Transformer-quality performance in both pretraining and downstream evaluation.
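To make the quadratic-vs-linear contrast concrete, the sketch below puts naive softmax attention, which scores every query against every key, next to a heavily simplified single-channel selective state-space scan in the spirit of Mamba. The scan updates a fixed-size state once per token using an input-dependent step size and input-dependent B and C. This is not Mamba's implementation. Real Mamba runs many channels with learned projections, a short convolution, gating, and a hardware-aware parallel scan. The parameter names and values here are hypothetical, and the discretisation (A_bar = exp(delta * A), B_bar approximated as delta * B) is an assumed simplification.

```python
import math
from typing import List, Tuple

Vector = List[float]


def naive_attention(q: List[Vector], k: List[Vector], v: List[Vector]) -> Tuple[List[Vector], int]:
    """Softmax attention; also returns how many query-key scores were computed."""
    d = len(q[0])
    out, n_scores = [], 0
    for qi in q:
        # Every query is scored against every key: n queries x n keys = n^2 scores.
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        n_scores += len(scores)
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wj / z * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out, n_scores


def softplus(z: float) -> float:
    # Numerically stable log(1 + e^z).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs: List[float], A: List[float], w_delta: float, b_delta: float,
                   w_B: List[float], w_C: List[float]) -> List[float]:
    """Single-channel selective SSM: one fixed-size state update per token, O(length) time."""
    h = [0.0] * len(A)  # hidden state; its size does not grow with sequence length
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size ("selection")
        B = [wb * x for wb in w_B]               # input-dependent input projection
        C = [wc * x for wc in w_C]               # input-dependent output projection
        for i, a in enumerate(A):
            # Simplified discretised update; a < 0 keeps exp(delta * a) < 1, so the state stays stable.
            h[i] = math.exp(delta * a) * h[i] + delta * B[i] * x
        ys.append(sum(c * hi for c, hi in zip(C, h)))
    return ys


for n in (64, 128):
    tokens = [[0.1 * (i % 7)] for i in range(n)]
    _, n_scores = naive_attention(tokens, tokens, tokens)
    ys = selective_scan([t[0] for t in tokens], A=[-1.0, -0.5], w_delta=1.0, b_delta=0.0,
                        w_B=[1.0, 0.5], w_C=[0.3, 0.7])
    print(n, n_scores, len(ys))  # prints "64 4096 64", then "128 16384 128"
```

Doubling the sequence length quadruples the attention scores but only doubles the scan's work. The trade-off is that the scan must squeeze all past context into a fixed-size state, which is exactly the context compression that attention avoids.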

As a result, OpenAI is under immense threat that GPT-4's current architecture could become obsolete as soon as 2024. This means other firms with enough capital may start training new models on the new architecture to test whether they can surpass GPT-4's performance.

Final verdict?

2024 should be nearly as turbulent as 2023 was. Many of the current SOTA models are under threat of being replaced and surpassed, and even small models seem to make a lot of business sense. The real problem is: how do you make an AI business resilient in such a rapidly changing environment?

One approach is to find an acceptable cost at acceptable performance and start from there: find LLMs that provide that kind of value for the price. Fine-tuned LLMs already seem to do this on a lot of tasks.
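The "acceptable cost at acceptable performance" rule can be written down as a tiny selection step. Here is a minimal sketch. It assumes you have a quality score for each model from your own task-specific evaluation and a cost estimate for your workload; the model names and numbers below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    quality: float  # score on your own task-specific eval set (0..1)
    cost: float     # e.g. USD per 1,000 requests for your workload


def cheapest_acceptable(candidates: List[Candidate], min_quality: float,
                        max_cost: float) -> Optional[Candidate]:
    """Return the cheapest model that clears both the quality bar and the budget, or None."""
    acceptable = [c for c in candidates if c.quality >= min_quality and c.cost <= max_cost]
    return min(acceptable, key=lambda c: c.cost, default=None)


# Hypothetical candidates and numbers, for illustration only.
candidates = [
    Candidate("large-general-model", quality=0.90, cost=40.0),
    Candidate("fine-tuned-small-model", quality=0.88, cost=4.0),
    Candidate("base-small-model", quality=0.70, cost=2.0),
]
best = cheapest_acceptable(candidates, min_quality=0.85, max_cost=50.0)
print(best.name if best else "no model meets the bar")  # prints fine-tuned-small-model
```

Because the bar is fixed by the task rather than by whichever model is currently SOTA, the same check can simply be re-run whenever a new model appears.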